
Show value or die early: The future of AI adoption

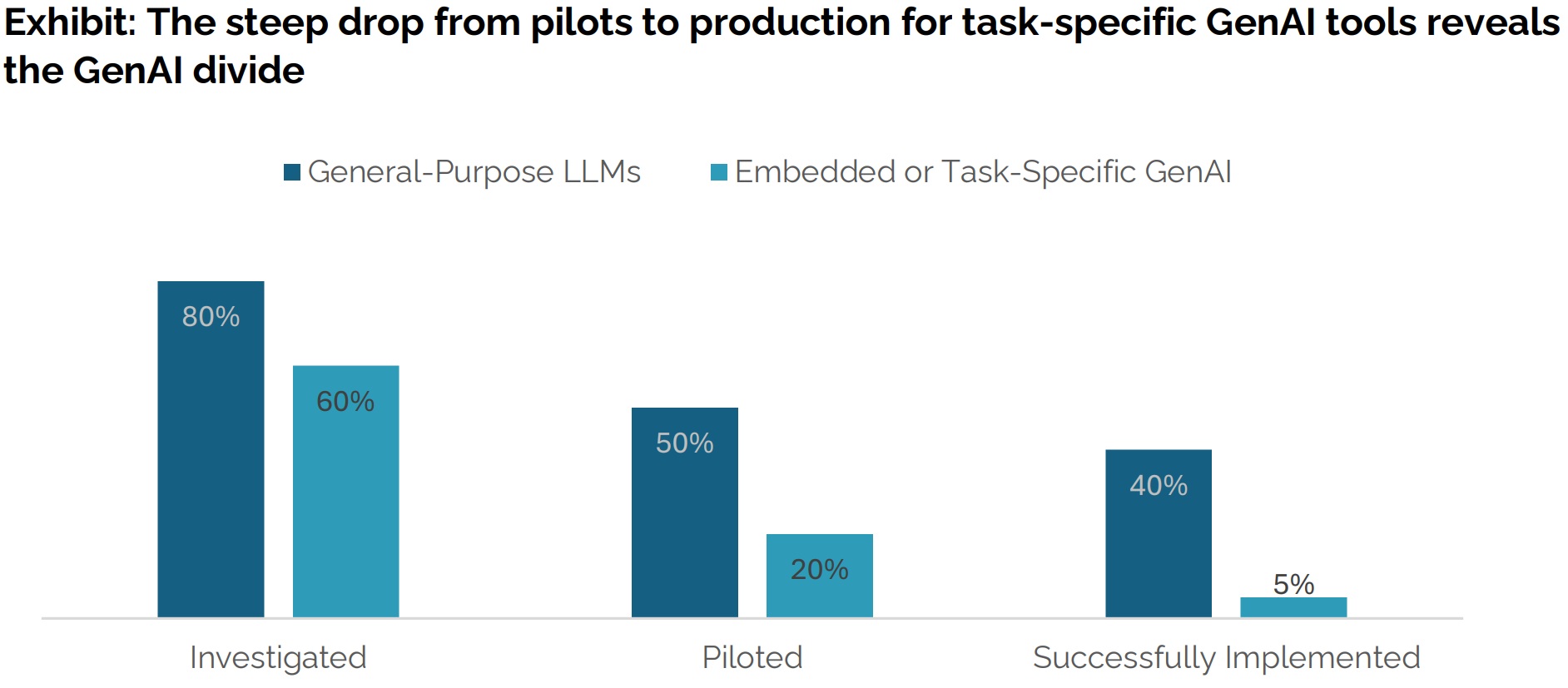

According to MIT’s Project NANDA, 95% of AI pilots deliver no measurable business value. Most generative AI projects stall before they ever reach real users or impact real metrics because organizations treat AI pilots as experiments without consequences. They are launched without a plan for validation, value, or kill criteria.

High adoption, low transformation. Sound familiar?

We need a different mindset: design every AI pilot to either prove its worth early or die early. There's no in-between.

The July 2025 report, "The GenAI Divide: State of AI in Business 2025", explains that outcomes are so starkly divided across buyers (enterprises, mid-market, SMBs) and builders (startups, vendors, consultancies) that its authors call the gap the "GenAI Divide". The divide is defined by high adoption but low transformation.

HyperFRAME Research found that only around 13% of organizations are truly prepared to deploy autonomous agents despite 83% claiming AI as a strategic priority. The majority still treat AI as a feature, not an operating capability. This mismatch creates what researchers call a “strategic mirage”: leaders talk about transformation, while teams build prototypes no one owns. Moving past the prototype era means building accountability, integration, and KPIs into every AI initiative. Agent-readiness is an organizational maturity test.

A lesson from the field: Deloitte’s six-figure misstep

Recently Deloitte faced public backlash after delivering a high-profile government report in Australia that contained fabricated citations, non-existent sources, and a quote from a court case that never existed. The report, partially drafted using generative AI, passed internal review and made it to publication before the errors were exposed. Deloitte later refunded a portion of the AUD 440,000 fee and reissued the report.

This incident wasn’t a failure of AI. It was a failure of process.

There was no human accountability line, no verification gate, and no clear ownership of truth. AI was treated as a shortcut, not a tool within a disciplined workflow.

It’s a powerful reminder: If AI is used without validation, governance, and clear responsibility, it doesn’t accelerate work but amplifies risk.

This is why AI pilots must be built with the same rigour as products. If an AI initiative can’t be verified, trusted, and owned, it shouldn’t move forward.

So let's take a look at what needs to be done.

1. Stop framing AI adoption as vague "exploration" or "pilots"; frame each initiative as a decision.

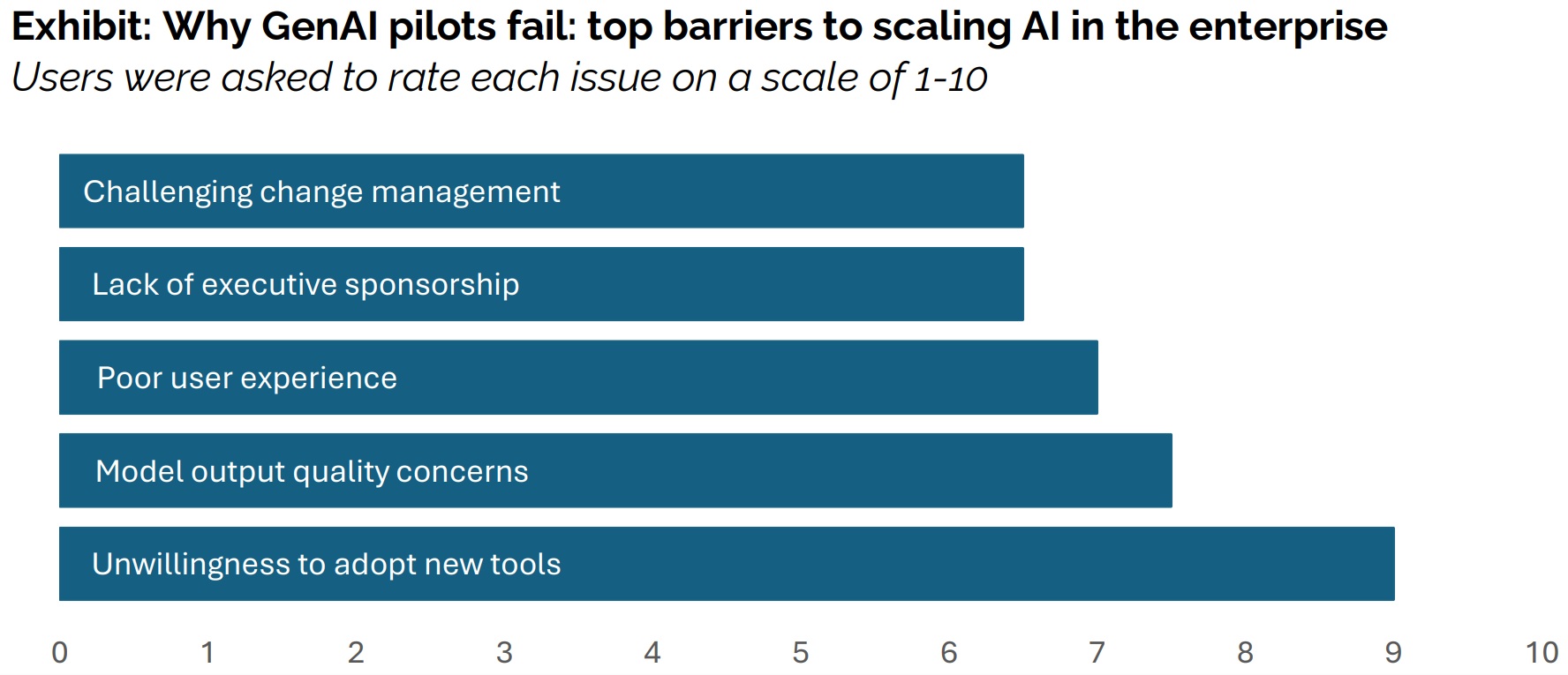

Most AI pilots are built to test capabilities, not outcomes. That’s why they linger. Before you even begin building, ask one question: “If this disappeared tomorrow, who would care?” If no team, no role, and no process would feel the loss, that pilot has no reason to exist.

You invest time and resources, show off half-working prototypes, collect praise, then… nothing. Projects stagnate in pilot purgatory. The root cause is weak decision boundaries and missing accountability. Without explicit criteria for success or shutdown, pilots linger and waste momentum.

McKinsey’s recent analysis makes it clear that most organizations are stuck in the “pilot playground.” They deploy chatbots and copilots, but never redesign workflows to let AI act autonomously. The next competitive edge is about becoming agent-ready, where AI can perform tasks, make decisions, and trigger actions in real business processes. McKinsey warns that impact only emerges when AI shifts from passive suggestions to active execution. Until AI is treated as part of the operating model, not a side experiment, it will remain a demo.

Integrate pilot design into strategy sprints and use change acceleration models to inject discipline early. The pilot isn’t a standalone sandbox but an outcome of a 4–8 week strategy sprint where stakeholders co-define what “success” looks like and build the smallest version capable of proving it. That sprint includes not just prototype development, but stakeholder alignment, metrics definition, and change planning. At the same time, apply a change acceleration mindset: anticipate resistance, align teams, and define kill criteria. That way, from day one, the pilot is governed by structure: either it evolves and scales, or it is terminated decisively. This prevents pilots from sliding into sunk-cost inertia.

2. Introduce a “value gate” before any work starts

Instead of jumping into experimentation, every AI pilot should answer three things up front:

- Who benefits? A real user or team, not “the business.”

- What changes? Faster work, lower costs, fewer errors, something concrete.

- How will we measure it? A KPI or measurable outcome, not “positive feedback.”

If you can’t answer these, you’re not doing a pilot. You’re building a presentation.
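The three questions above can be turned into a simple pre-flight check. The following is a minimal sketch, not a prescribed tool: the `PilotProposal` fields, the `value_gate` function, and the list of "vague" answers are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical set of answers that do not count as real answers.
VAGUE_ANSWERS = {"", "tbd", "the business", "positive feedback"}


@dataclass
class PilotProposal:
    name: str
    beneficiary: str  # Who benefits? A real user or team.
    change: str       # What changes? Something concrete.
    kpi: str          # How will we measure it?


def value_gate(proposal: PilotProposal) -> list[str]:
    """Return the questions the proposal fails to answer.

    An empty list means the proposal passes the gate.
    """
    checks = [
        ("Who benefits?", proposal.beneficiary),
        ("What changes?", proposal.change),
        ("How will we measure it?", proposal.kpi),
    ]
    return [
        question
        for question, answer in checks
        if answer.strip().lower() in VAGUE_ANSWERS
    ]


if __name__ == "__main__":
    proposal = PilotProposal(
        name="Helpdesk assistant",
        beneficiary="the business",
        change="fewer tickets handled manually",
        kpi="positive feedback",
    )
    for gap in value_gate(proposal):
        print(f"Unanswered: {gap}")
```

A real gate would be a conversation, not a string match, but writing the questions down as required fields makes it harder to start work with any of them blank.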

3. Measure AI like a product, not a demo

Demo success is easy: people nod, technology works, applause follows. Product success is harder, and that’s why so few pilots survive.

A demo only needs to impress; a product must perform. In product development, pilots are built with users, metrics, and a path to rollout. Success isn’t applause, it’s impact: fewer clicks, faster work, real savings. Yet many AI pilots stop at “look what it can do,” never asking, “does anyone actually use this?” A chatbot that answers questions in a slide deck is a demo. A chatbot that replaces 200 helpdesk tickets a week is a product. Until AI is treated with product discipline (user validation, performance targets, integration plans), it will stay in prototype limbo.

CIO.com highlights a brutal contradiction: 92% of tech leaders plan to implement AI by the end of 2025, but up to 60% will abandon their initiatives due to lack of “AI readiness.” Data infrastructure, governance, security, and change management are missing, but pilots proceed anyway. This readiness gap explains why so many AI projects stall right after prototypes. If AI cannot be integrated, monitored, or trusted within a real workflow, it has no path to value. Being “agent-ready” starts with fixing the foundations, not launching more prototypes.

Replace these measurements:

- From: “It works.” → To: “It reduced a task from 30 minutes to 5.”

- From: “People liked it.” → To: “70% of users now choose it over the old process.”

- From: “The prototype is impressive.” → To: “It removed 2 manual steps per workflow.”

Internal tools still need adoption. If people don’t use it, it didn’t succeed.

4. Build small, integrate early, scale only after proof

The longer an AI initiative sits alone as a sandbox experiment, the higher the chance it dies there.

A practical lifecycle looks like this:

- Try — Test the value with real users, on real work.

- Integrate — Embed it into one real workflow or system.

- Measure — Track whether it actually improves anything.

- Expand — Only after impact is proven, scale it elsewhere.

Skipping integration and measurement is the fastest path to failure. Failing at integration or measurement, by contrast, is a fast way to learn whether the project should go on to scale, evolve, and iterate, or get killed.

5. Write kill criteria with courage

The most mature AI organizations aren’t the ones with the most pilots.

They’re the ones willing to kill things quickly.

Define early shutdown rules, such as:

- If it doesn’t improve speed or quality within 90 days, end it.

- If users avoid it, archive it.

- If manual work stays faster, stop investing.

Killing a weak pilot isn’t failure, it’s resource intelligence.
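Shutdown rules like the ones above are easiest to honor when they are written down as explicit checks before the pilot starts. Here is a minimal sketch under stated assumptions: the `PilotSnapshot` fields, the `kill_reasons` function, and the 50% adoption threshold are hypothetical; only the 90-day window comes from the example rules above.

```python
from dataclasses import dataclass


@dataclass
class PilotSnapshot:
    days_running: int
    baseline_minutes: float  # time per task with the old, manual process
    pilot_minutes: float     # time per task using the AI tool
    quality_improved: bool   # e.g. fewer errors than the manual process
    adoption_rate: float     # share of eligible users choosing the tool (0 to 1)


def kill_reasons(
    snapshot: PilotSnapshot,
    review_after_days: int = 90,   # from the example rule above
    min_adoption: float = 0.5,     # assumed threshold for "users avoid it"
) -> list[str]:
    """Return every shutdown rule the pilot currently triggers."""
    reasons = []
    faster = snapshot.pilot_minutes < snapshot.baseline_minutes

    # Rule 1: no speed or quality improvement within the review window.
    if (
        snapshot.days_running >= review_after_days
        and not faster
        and not snapshot.quality_improved
    ):
        reasons.append("No speed or quality improvement within the review window: end it.")

    # Rule 2: users avoid the tool.
    if snapshot.adoption_rate < min_adoption:
        reasons.append("Users avoid it: archive it.")

    # Rule 3: the manual process is still faster.
    if snapshot.baseline_minutes < snapshot.pilot_minutes:
        reasons.append("Manual work stays faster: stop investing.")

    return reasons


if __name__ == "__main__":
    snapshot = PilotSnapshot(
        days_running=95,
        baseline_minutes=30,
        pilot_minutes=35,
        quality_improved=False,
        adoption_rate=0.2,
    )
    for reason in kill_reasons(snapshot):
        print(reason)
```

The thresholds matter less than the fact that they are agreed in advance; a rule chosen after the results are in is easy to bend.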

End up in the successful 5% instead of the AI adoption graveyard

No matter where your organization is on its AI journey (early experimentation, active pilots, or scaling initiatives) this is the moment to pause and check your foundation. Are your AI projects tied to real users, measurable outcomes, and a clear path to integration? Or are they drifting toward the 95% that quietly fade into the AI graveyard?

You don’t need more models, prompts, or prototypes. You need clarity, ownership, and the courage to demand proof. The organizations that succeed with AI aren’t the ones that do the most but the ones that keep only what works.

Make sure every AI initiative you run can earn its place. If it can’t, let it die early.

The future belongs to the 5% who build with discipline, not the 95% who build on hope.
